Robot Autonomy: The Next Frontier

A journey into the heart of robotics

We live in an unprecedented age of exploration: humans will return to the Moon, venture to Mars, and someday travel far beyond. Robots will continue to play a pivotal role in these endeavors, helping us explore distant worlds. Autonomy is critical for these robots, especially when direct human oversight is difficult or impossible; a command sent to Mars, for example, takes several minutes to arrive. Meanwhile, back on Earth, robotics is already transforming industries and our daily lives, from AI-driven robotic arms assembling cars to drones monitoring crops.

My passion for robotics started at a young age. Growing up in Silicon Valley, I’ve been involved in robotics since high school, when I joined an all-girls robotics team. Around the same time, I was doing astrophysics research at a local university, and I realized that my dream was to combine my two passions: to work on robots for space exploration! Since then, I have nurtured my passion for robotics by working on projects in industry, in research labs, and even with friends, and it has been nothing short of exciting.

In this article, I’ll walk you through the following sections:

What makes me passionate about robot autonomy

Why robot autonomy is important now

Some of the basics of robot autonomy

Cool robotics industry and research applications

Stewardship and collective responsibility of robot autonomy in our society

By the end of this article, I hope you’ll share my excitement about the potential of robot autonomy. Whether you're a student, educator, professional, or simply a curious mind, there are myriad ways to get involved. Let's embark on this journey together!

Why Robot Autonomy is So Cool ✨

Why am I passionate about robotics? Personally, I love the interdisciplinary aspect of robotics. It can involve a lot of theory and math, as well as low-level systems and hardware—it’s full-stack! There are also beautiful connections with many fields, including physics, cognitive science, optimization, control theory, and more.

More than that, I am drawn to robotics because it helps me appreciate humans' remarkable capability to learn, perceive, and act in the world. Beyond humans, we can also draw inspiration from nature: from how birds fly with agility and efficiency, to how ant colonies build intricate structures, to what schools of fish can teach us about intelligence.

Why Now? ⏱️

While people have been dreaming up and inventing automata for centuries, the first modern robot arrived in the 1950s: the Unimate, a programmable robotic arm invented by George Devol. Since then, many robotic capabilities have gone from imagination to reality.

We are now in an incredible time for robotics—the intersection of large-scale data and information, new hardware technologies, and the advent of new algorithms creates a period of unprecedented technological change. The development and widespread availability of hardware, such as NVIDIA Graphics Processing Units (GPUs) and Google Tensor Processing Units (TPUs), have provided the computational horsepower necessary to process and analyze vast amounts of data. This capability is critical for training complex AI models, including foundation models (FMs) like Large Language Models (LLMs) and diffusion models, revolutionizing how machines understand and interact with the world. With these advances and more, AI and robotics will only continue to grow in the next decade and beyond to meet the growing demands of our society.

Understanding Robot Autonomy 🧠

What is robot autonomy? Robot autonomy is the ability of a robot to sense its environment, process information, and make decisions to complete tasks without constant human intervention. There is ongoing research on making robotics more “end-to-end”, directly translating sensor data (such as image pixels) into robot actions (control inputs such as motor commands). Traditionally, however, the robot autonomy stack is a “sense-plan-act” pipeline made up of the following components:

Perception 👁️

Perception in robotics is the process of interpreting and understanding the environment through sensor data. This understanding is crucial for robots to perform tasks autonomously and interact safely with the world around them.

One significant challenge in robot perception is the inherent ambiguity and complexity of the real world, which is often unstructured and dynamic. Factors like varying lighting conditions, occlusions (objects blocking one another from view), and changes in perspective can significantly alter the sensor data a robot receives from its environment. For instance, a shadow falling across an object can drastically change how it appears to a camera, and a LiDAR sensor may struggle to accurately measure a highly reflective surface.

Robots can rely on many sensors, including cameras to capture images, LiDAR for 3D mapping, and radar to detect objects. Robots also often carry Inertial Measurement Units (IMUs), which measure linear acceleration and angular velocity and help track the robot’s orientation and movement.

Computer vision plays a pivotal role in robotic perception. Deep learning, loosely inspired by the structure and function of the human brain, has significantly advanced the field, enabling robots to perform tasks that were previously extremely difficult or unachievable. Convolutional neural networks (CNNs), for example, slide small learned filters across an image to detect features such as edges and textures, which lets robots perform object detection and scene understanding.
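To make the idea of a sliding filter concrete, here is a minimal sketch of the core operation inside a CNN layer, written in plain Python. The kernel is hand-picked to respond to vertical edges; in a real CNN the filter values are learned from data, and the tiny image is made up for illustration. Like most deep learning libraries, it does not flip the kernel (strictly speaking, this is cross-correlation).

```python
def conv2d(image, kernel):
    """Valid-mode 2D convolution (cross-correlation), the core CNN operation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            s = 0.0
            for u in range(kh):
                for v in range(kw):
                    s += image[i + u][j + v] * kernel[u][v]
            out[i][j] = s
    return out

# Hypothetical 4x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge filter; a trained CNN would learn its own values.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

edges = conv2d(image, kernel)  # [[3.0, 3.0, 0.0], [3.0, 3.0, 0.0]]
```

The filter responds strongly where the window straddles the dark-to-bright boundary and gives zero inside the uniform bright region. A CNN stacks many such filters, with learned weights and nonlinearities, to build up from edges to object-level features.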

State Estimation 🤳🏻

State estimation is a foundational aspect of robotics. It involves accurately determining the robot’s state (such as position, orientation, and velocity) in 3D space.

Let’s explore some of the key concepts in state estimation: sensor calibration, state estimation techniques such as the Kalman Filter (KF) and Extended Kalman Filter (EKF), and applications of state estimation such as Visual-Inertial Odometry (VIO) and Simultaneous Localization and Mapping (SLAM).

Sensor calibration: In the perception section, we covered how robots use various sensors (like cameras, LiDARs, and IMUs) to perceive their environments. However, these sensors have inherent noise and biases that introduce measurement errors. Sensor calibration estimates and corrects for the systematic part of these errors, such as a gyroscope’s constant bias or a camera’s lens distortion, so that the data fed into state estimation algorithms is as accurate as possible. Calibration may involve detailed modeling of sensor behavior before and during robot operation, including adjustments for environmental factors like temperature, which can (surprisingly) have a significant effect on sensor readings, for example by distorting camera images.
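One of the simplest calibration steps is estimating a gyroscope's constant bias while the robot sits still, since the true angular rate is then zero. The sketch below simulates this with made-up numbers (a 0.02 rad/s bias, Gaussian noise, and a 100 Hz sample rate are all hypothetical) and ignores temperature effects; real calibration pipelines model considerably more.

```python
import random

def estimate_gyro_bias(stationary_samples):
    """While the robot is held still, the true angular rate is zero,
    so the average reading estimates the gyroscope's constant bias."""
    return sum(stationary_samples) / len(stationary_samples)

def calibrate(reading, bias):
    """Remove the estimated bias from a raw gyroscope reading."""
    return reading - bias

random.seed(0)
TRUE_BIAS = 0.02   # rad/s, hypothetical bias used only for this simulation
NOISE_STD = 0.005  # rad/s, hypothetical white-noise level
DT = 0.01          # s, hypothetical 100 Hz sample period

# Simulate 10 s of readings from a stationary gyroscope.
samples = [TRUE_BIAS + random.gauss(0.0, NOISE_STD) for _ in range(1000)]
bias = estimate_gyro_bias(samples)

# Integrating angular rate gives heading, so a small bias becomes a growing drift.
raw_drift = sum(s * DT for s in samples)
cal_drift = sum(calibrate(s, bias) * DT for s in samples)
```

Uncorrected, the bias integrates to roughly 0.2 rad (over 11 degrees) of heading drift in just 10 seconds; after subtracting the estimated bias, the drift on this data is essentially zero. Because the bias is estimated and checked on the same samples here, this overstates how well calibration works in practice.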

State estimation techniques: These techniques use sensor data and mathematical models to provide an estimate of the robot’s state despite the noise and uncertainties. One common approach to state estimation involves using Kalman Filters (KF) and their variants.

The Kalman Filter estimates a system’s state from noisy measurements by repeating two steps: it predicts how the state evolves using a model of the system, then corrects that prediction with each incoming measurement, weighting the two by their uncertainties. It assumes linear system models with Gaussian noise; under those assumptions it is the optimal (minimum mean-squared error) estimator, and it is computationally cheap because it involves only linear algebra. However, robots frequently have nonlinear dynamics and sensor models, which introduces complexity. The Extended Kalman Filter (EKF) addresses this challenge by linearizing the system and measurement models around the current state estimate.
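To make the predict-and-correct cycle concrete, here is a minimal one-dimensional Kalman filter. It assumes a hypothetical robot moving along a line with a commanded step `u` each cycle, a noisy position sensor, and hand-picked noise variances `Q` (motion) and `R` (sensor). It is a scalar sketch for intuition; a general implementation would use matrices.

```python
import random

def kalman_step(x, P, u, z, Q, R):
    """One predict/update cycle of a scalar Kalman filter.

    x, P : previous state estimate and its variance
    u    : commanded displacement (motion model x' = x + u)
    z    : new position measurement (measurement model z = x + noise)
    Q, R : process and measurement noise variances
    """
    # Predict: push the estimate through the motion model; uncertainty grows.
    x_pred = x + u
    P_pred = P + Q
    # Update: the gain K weighs the prediction against the measurement.
    K = P_pred / (P_pred + R)
    x_new = x_pred + K * (z - x_pred)
    P_new = (1 - K) * P_pred
    return x_new, P_new

random.seed(1)
Q, R = 0.01, 0.25             # hypothetical noise variances
true_x, x, P = 0.0, 0.0, 1.0  # true position, estimate, estimate variance
for _ in range(50):
    u = 0.1                                    # commanded step each cycle
    true_x += u + random.gauss(0.0, Q ** 0.5)  # actual motion is slightly noisy
    z = true_x + random.gauss(0.0, R ** 0.5)   # noisy position sensor
    x, P = kalman_step(x, P, u, z, Q, R)
```

Regardless of the particular noise draws, the variance `P` settles at about 0.045 here, well below the sensor variance `R = 0.25`: combining the motion model with many measurements yields an estimate more trustworthy than any single reading.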

Applications of state estimation: Visual-inertial odometry (VIO) uses data from a camera and an IMU to estimate the robot’s motion (odometry) over time. The camera provides visual information about the environment, while the IMU provides high-rate measurements of the robot’s acceleration and rotation. By fusing these sensor measurements using state estimation techniques (often EKFs), we can track the robot’s position and orientation.

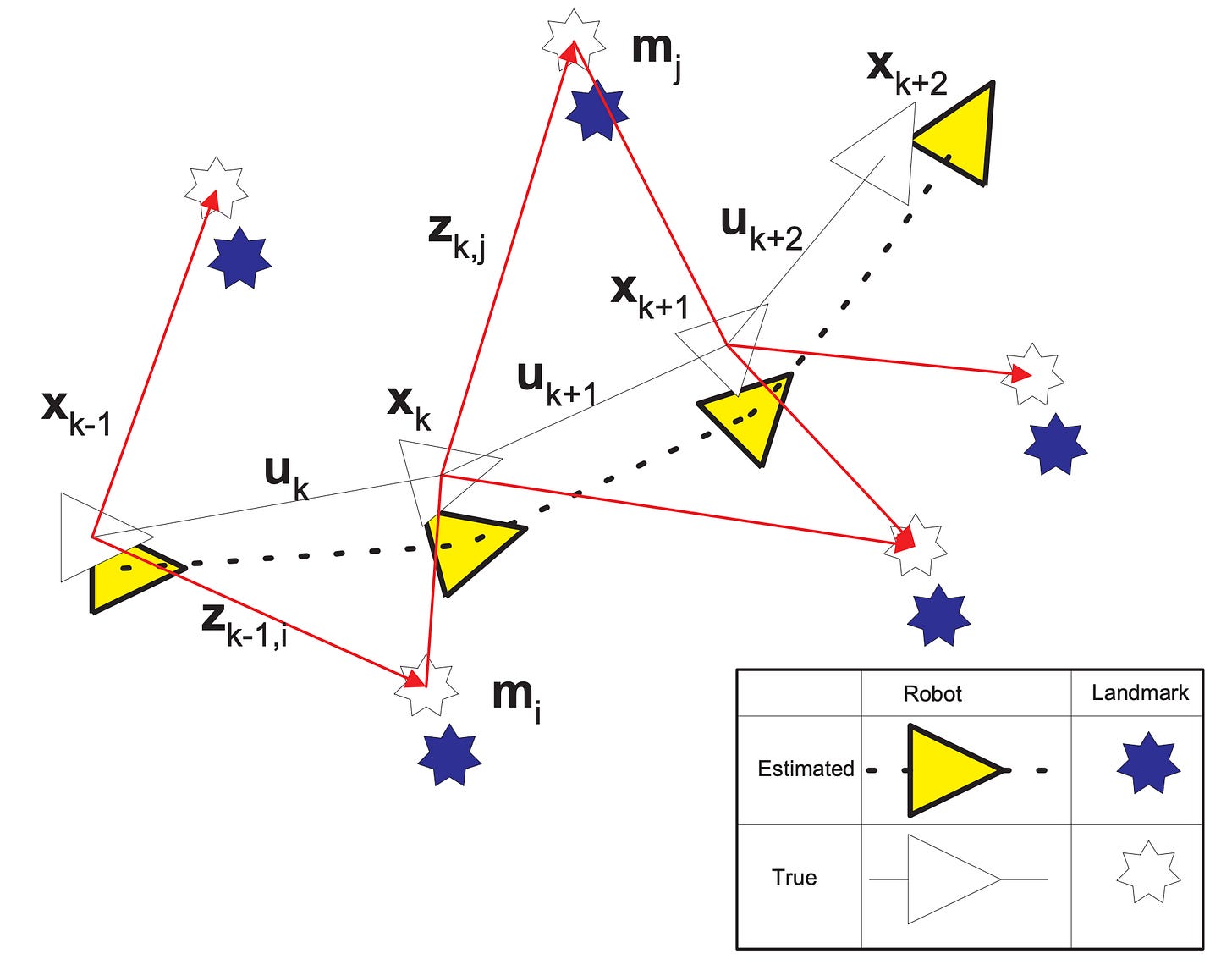
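A full VIO system is far beyond a short example, but the EKF fusion pattern it often relies on can be sketched in one dimension. Assume, hypothetically, a robot on a straight rail whose motion increments `u` come from odometry or IMU integration, and which measures its range to a beacon mounted `D` metres above the rail. The range model h(x) = sqrt(x^2 + D^2) is nonlinear, so the filter linearizes it using its derivative at the predicted state. All names and numbers here are illustrative.

```python
import math
import random

D = 2.0  # beacon height above the rail in metres (hypothetical)

def h(x):
    """Nonlinear measurement model: straight-line range from robot to beacon."""
    return math.hypot(x, D)

def ekf_step(x, P, u, z, Q, R):
    # Predict with the motion model x' = x + u (linear in this toy example).
    x_pred = x + u
    P_pred = P + Q
    # Linearize the measurement model around the prediction: H = dh/dx.
    H = x_pred / math.hypot(x_pred, D)
    S = H * P_pred * H + R    # innovation (measurement residual) variance
    K = P_pred * H / S        # Kalman gain
    x_new = x_pred + K * (z - h(x_pred))
    P_new = (1 - K * H) * P_pred
    return x_new, P_new

random.seed(2)
Q, R = 0.01, 0.04               # hypothetical noise variances
true_x, x, P = 5.0, 4.0, 1.0    # the initial estimate is off by 1 m
for _ in range(40):
    u = 0.2                                      # displacement from odometry/IMU
    true_x += u + random.gauss(0.0, Q ** 0.5)
    z = h(true_x) + random.gauss(0.0, R ** 0.5)  # noisy range measurement
    x, P = ekf_step(x, P, u, z, Q, R)
```

The only change from the linear Kalman filter is that the measurement is predicted with the nonlinear `h` and weighted with its local slope `H`. Because range is symmetric about the beacon, this sketch only works if the estimate starts on the correct side; real systems face similar ambiguities and handle them with richer sensor data.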

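Simultaneous Localization and Mapping (SLAM) addresses the challenge of mapping an unknown environment while navigating within it. It is a “chicken and egg” problem: building an accurate map requires knowing where the robot is, while localizing the robot requires a map. SLAM solves both problems together, building a reliable map while estimating the robot’s location on that map. Through SLAM, robots can explore spaces they have never seen before.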

Planning 🗓️

Now that we have an estimate of the current state of our robot and its environment, the robot can tackle the crucial task of planning. Planning algorithms let the robot autonomously compute the paths and sequences of tasks needed to achieve its goals.

Robot planning is rich with diverse algorithms, each suited to specific scenarios and each with its own advantages and limitations. Here, we’ll discuss two prominent planning paradigms: search-based and sampling-based.

Search-based algorithms look for a path that minimizes a “cost function”, which can capture factors such as distance traveled, energy consumption, or time taken. These algorithms typically require discretizing the environment into a grid or graph. A* search is a widely used algorithm for finding the lowest-cost path with respect to a defined cost function; it guides its search with a heuristic that estimates the remaining cost to the goal.
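Here is a compact A* sketch on a small occupancy grid. It assumes 4-connected moves with a cost of 1 each and uses the Manhattan distance as the heuristic; that heuristic never overestimates the true remaining cost on such a grid, so A* returns a lowest-cost path. The grid and coordinates are made up for illustration.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).
    Each move costs 1; Manhattan distance is an admissible heuristic."""
    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Reconstruct the path by following parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None  # goal unreachable

grid = [[0, 0, 0, 0],
        [1, 1, 1, 0],   # a wall with a gap on the right
        [0, 0, 0, 0]]
path = astar(grid, (0, 0), (2, 0))
```

The heap always expands the cell with the smallest estimated total cost `f`, so the search heads toward the goal instead of flooding the whole grid. Optimizing energy or time instead of distance would mean changing the move costs, and the heuristic would need to remain an underestimate of the new cost.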

Sampling-based algorithms, on the other hand, randomly sample points (candidate robot positions or configurations) in the robot’s environment and connect them into a graph or tree of potential paths. Collision checking then becomes critical, ensuring that each connection avoids obstacles. Because these algorithms rely on random sampling, they are probabilistic: they can explore large, complex spaces efficiently and, given enough samples, will find a path with probability approaching one if one exists, but the path they return is often not the shortest or most efficient. An example of a sampling-based algorithm is the Rapidly-Exploring Random Tree (RRT), which grows a tree from the start toward randomly sampled points until it reaches the goal.
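The following is a basic RRT sketch in 2D, assuming circular obstacles, a fixed step size, and a goal tolerance (all values hypothetical). Each iteration samples a random point, extends the nearest tree node a short step toward it, and keeps the new node only if the connecting segment passes a collision check. The check only tests evenly spaced points along each short segment, which is adequate for this toy example but is not a guarantee in general.

```python
import math
import random

def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tol=0.5, max_iters=5000, seed=0):
    """Basic 2D RRT. Obstacles are circles given as (cx, cy, radius).
    Returns a list of points from start to near the goal, or None."""
    rng = random.Random(seed)
    lo, hi = bounds

    def collision_free(p, q):
        # Test 11 evenly spaced points on segment p->q against every circle.
        for i in range(11):
            t = i / 10
            x = p[0] + t * (q[0] - p[0])
            y = p[1] + t * (q[1] - p[1])
            for cx, cy, r in obstacles:
                if math.hypot(x - cx, y - cy) <= r:
                    return False
        return True

    nodes = [start]
    parent = {start: None}
    for _ in range(max_iters):
        sample = (rng.uniform(lo, hi), rng.uniform(lo, hi))
        nearest = min(nodes, key=lambda n: math.dist(n, sample))
        d = math.dist(nearest, sample)
        if d == 0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        scale = min(step, d) / d
        new = (nearest[0] + scale * (sample[0] - nearest[0]),
               nearest[1] + scale * (sample[1] - nearest[1]))
        if not collision_free(nearest, new):
            continue
        nodes.append(new)
        parent[new] = nearest
        if math.dist(new, goal) <= goal_tol:
            # Walk back up the tree to recover the path.
            path = [new]
            while parent[path[-1]] is not None:
                path.append(parent[path[-1]])
            return path[::-1]
    return None

path = rrt((1.0, 1.0), (9.0, 9.0), obstacles=[(5.0, 5.0, 2.0)])
```

The returned path reaches within `goal_tol` of the goal and is typically jagged and longer than necessary, matching the trade-off described above. Variants such as RRT* add a rewiring step to improve path quality as more samples are drawn.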

Each planning algorithm has its own strengths and weaknesses, so the right choice depends on the task and the available computational resources.

Controls 🦾

We created a plan—now we have to execute and adjust our actions to achieve these planned outcomes.

The foundation of robot control lies in the equations of motion, which can be derived with Newtonian mechanics, by analyzing forces and torques directly, or with Lagrangian mechanics, by working from the system’s kinetic and potential energy. These equations are mathematical models that predict the robot’s dynamics: given the forces and torques acting on the robot (such as gravity and motor torques), they predict how the robot will move.
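As a small worked example of the Lagrangian route, consider a simple pendulum of mass m on a massless rod of length l, with angle θ measured from vertical. Its Lagrangian is L = ½ m l² θ̇² + m g l cos θ, and the Euler-Lagrange equation d/dt(∂L/∂θ̇) - ∂L/∂θ = 0 gives m l² θ̈ + m g l sin θ = 0, that is, θ̈ = -(g/l) sin θ. The sketch below integrates that equation numerically; the pendulum length, time step, and choice of integrator (semi-implicit Euler) are assumptions made for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def pendulum_accel(theta, length):
    """theta_ddot = -(g / l) * sin(theta), from the Euler-Lagrange equation."""
    return -(G / length) * math.sin(theta)

def simulate(theta0, length=1.0, dt=0.001, duration=2.0):
    """Integrate the equation of motion with semi-implicit Euler steps,
    starting from rest at angle theta0 (radians)."""
    theta, omega = theta0, 0.0
    trajectory = [theta]
    for _ in range(int(duration / dt)):
        omega += pendulum_accel(theta, length) * dt  # update velocity first
        theta += omega * dt                          # then position
        trajectory.append(theta)
    return trajectory

traj = simulate(theta0=0.1)  # release from 0.1 rad; one sample every 1 ms
```

Released from 0.1 rad, the simulated pendulum swings to about -0.1 rad roughly 1 second later, about half of the small-angle period 2π√(l/g) ≈ 2.0 s. This is exactly the kind of prediction a controller relies on.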

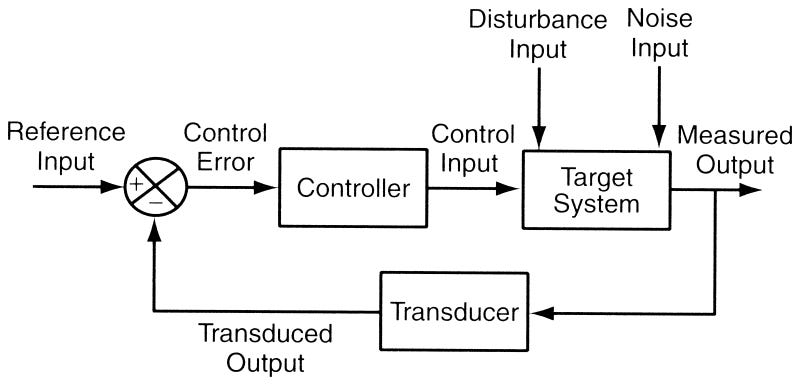

In the real world, there are often many disturbances and uncertainties, and that’s where feedback control comes in. A feedback control system continuously measures the robot’s motion with sensors and feeds those measurements back into a controller. The controller compares the actual motion with the planned motion and adjusts its commands to keep the robot on track. For instance, if a gust of wind pushes a drone off course, the feedback controller detects the deviation and corrects for it.
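A PID (proportional-integral-derivative) controller is one of the most common feedback strategies. The sketch below assumes a drone reduced to a vertical point mass, a constant downdraft force as the disturbance, and hand-tuned gains, all hypothetical. At each step it measures the altitude error, and the controller adds a correction on top of a thrust that cancels gravity.

```python
G = 9.81  # gravitational acceleration, m/s^2

class PID:
    """Textbook PID controller: u = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative on the very first call, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def fly(target=10.0, kp=4.0, ki=1.0, kd=3.0, mass=1.0,
        wind_force=-2.0, dt=0.01, duration=20.0):
    """Simulate a vertical point-mass 'drone': m * z_ddot = thrust - m*g + wind_force.
    Returns the final altitude in metres."""
    pid = PID(kp, ki, kd)
    z, vz = 0.0, 0.0
    for _ in range(int(duration / dt)):
        error = target - z                         # planned minus measured altitude
        thrust = mass * G + pid.update(error, dt)  # cancel gravity, then correct
        az = (thrust - mass * G + wind_force) / mass
        vz += az * dt
        z += vz * dt
    return z
```

With the default gains, `fly()` settles at the 10 m target despite the downdraft. Calling `fly(ki=0.0)` instead leaves a steady offset of about 0.5 m (the disturbance divided by `kp`), which illustrates why the integral term is there: it keeps accumulating error until the persistent disturbance is cancelled.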

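There are many different control strategies. For systems that can be approximated as linear, one such strategy is the Linear Quadratic Regulator (LQR), which computes the optimal state-feedback control law for a quadratic cost function that trades off deviation from the desired state against control effort. While LQR is a powerful tool for linear systems, real-world robots are often nonlinear. In these cases, we can use strategies such as Model Predictive Control (MPC), which uses a model of the robot to predict its future behavior over a short horizon, optimizes a sequence of control inputs, applies only the first input, and then repeats the process at the next time step. MPC can also explicitly account for constraints such as actuator limits.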
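To illustrate LQR, here is a sketch using NumPy for a discrete-time double integrator: a cart whose state is position and velocity, driven by an acceleration input. The weights `Q` and `R` and the time step are hand-picked assumptions. The gain is computed by simply iterating the discrete-time Riccati equation until it converges; production code would more likely call a dedicated solver such as SciPy's `scipy.linalg.solve_discrete_are`.

```python
import numpy as np

def dlqr(A, B, Q, R, iters=500):
    """Discrete-time LQR gain K via fixed-point iteration of the Riccati equation.
    Minimizes sum_k x_k^T Q x_k + u_k^T R u_k subject to x_{k+1} = A x_k + B u_k."""
    P = Q.copy()
    for _ in range(iters):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P = Q + A.T @ P @ (A - B @ K)
    return K

dt = 0.1                     # hypothetical control period, seconds
A = np.array([[1.0, dt],
              [0.0, 1.0]])   # double integrator: [position, velocity]
B = np.array([[0.5 * dt**2],
              [dt]])
Q = np.diag([1.0, 0.1])      # penalize position error more than velocity
R = np.array([[0.01]])       # penalty on control effort
K = dlqr(A, B, Q, R)

x = np.array([1.0, 0.0])     # start 1 m from the goal, at rest
for _ in range(100):
    u = -K @ x               # optimal state-feedback law u = -K x
    x = A @ x + B @ u
```

After 10 simulated seconds the cart has settled at the goal. Increasing `R` makes control effort more expensive and gives a gentler, slower response, while increasing the position weight in `Q` does the opposite. MPC builds on the same cost-trade-off idea but re-solves a finite-horizon problem at every step, which is what lets it handle constraints; that is beyond this sketch.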

Additional Resources 📄

This is a non-exhaustive overview of robotics concepts! To dive deeper into these topics, feel free to check out these resources:

Textbooks

University courses

Princeton’s Introduction to Robotics, taught by Anirudha Majumdar

UMichigan’s Mobile Robotics, taught by Maani Ghaffari

MIT Underactuated Robotics, taught by Russ Tedrake

MIT Robotic Manipulation, taught by Russ Tedrake

Stanford Deep Learning for Computer Vision, taught by Fei-Fei Li and Ehsan Adeli

CMU Planning Techniques for Robotics, taught by Maxim Likhachev

Seminar series

Podcasts

Robotics Applications in Industry, Government, and Universities 🤖

Here are some cool robots in industry, government, and universities:

Mapping and inspection (from Skydio):

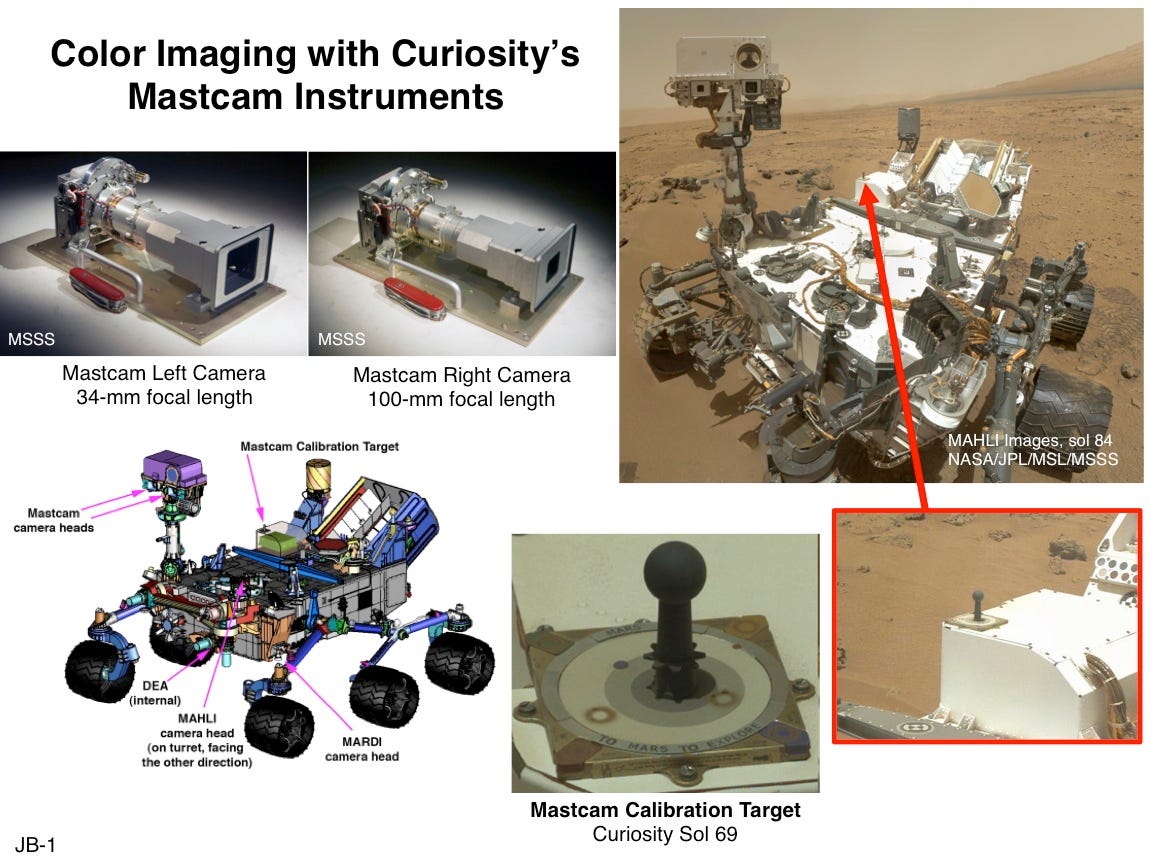

Space exploration (from NASA JPL):

Healthcare (from Zipline):

Drone racing (from ETH Zurich):

Manipulation (from Toyota Research Institute (TRI)):

Aerial manipulation (from ETH Zurich):

And there are so many more! Progress in robotics is rapid, and the opportunities keep growing.

Robotics and Stewardship 👯

Beyond exploring extreme environments in space, autonomous robots are transforming society as we know it. As AI and robotics continue to advance, it is crucial to acknowledge that robotics alone will not solve humanity’s greatest problems, such as climate change, poverty, inequality, and barriers to education and healthcare. Robotics will also continue to impact the economy, displacing jobs and creating social and political unrest.

Fostering a greater connection with nature and community while prioritizing health and wellness is vital, in my view. Robotics can support these goals, but it cannot fully achieve them on its own, and sometimes technological progress can even compete with them. This underscores the importance of integrating the humanities, like philosophy, art, and literature, into the education and professional development of engineers and scientists. Our aim should be to enhance the human condition, not just optimize for wealth and material gain.

The ethical considerations of robotics, including regulations and legal frameworks, cannot be an afterthought; they must be built into the systems we create, from conception to deployment. Achieving this vision requires contributions from people across all sectors and disciplines to collectively build the future we want to live in.

If you enjoyed this article or have any comments, feedback, or questions, please email us at admin@theoverview.org. Thanks for reading!

Stay curious,

Maggie
