The Future of In-Space Computing

Why compute in space has been different… until now.

The flagship Mars 2020 mission executed groundbreaking entry, descent, and landing algorithms to successfully land on the Martian surface. Surely, that required unprecedented computing power… right?

Well, the processor at the helm of the operation was the BAE RAD750, originally released in 2001. For reference, the RAD750 was marketed at 300 million instructions per second (MIPS), whereas a modern household CPU – the AMD Ryzen 9 7950X – was recently benchmarked at 258.94 billion instructions per second (GIPS), more than 860 times as many. What gives? Why wouldn’t we launch the most capable CPU on the market?

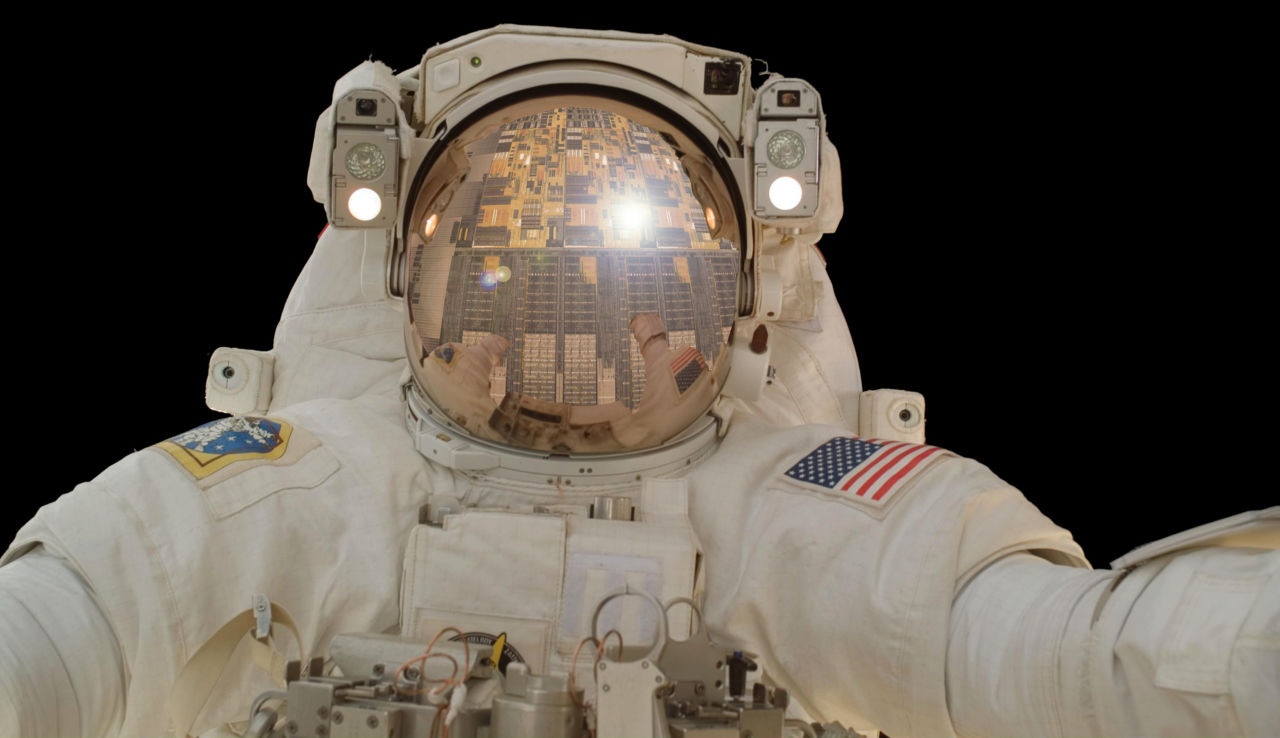

Since we first began launching objects into space, terrestrial computers and space-based processors were never really compared against each other; their design criteria were just too divergent. A household computer may be optimized for streaming 4K video or playing a game at a high frame rate, with a practically inexhaustible supply of power from a wall outlet. A spacecraft, by contrast, might (1) be continually bombarded with radiation, (2) operate in an extremely low-power environment, and (3) have no way to service or upgrade its hardware for its entire life. All three constraints demand fascinating workarounds, but the radiation landscape offers the clearest window into why engineering for space is so much harder.

The harsh radiation environment of space

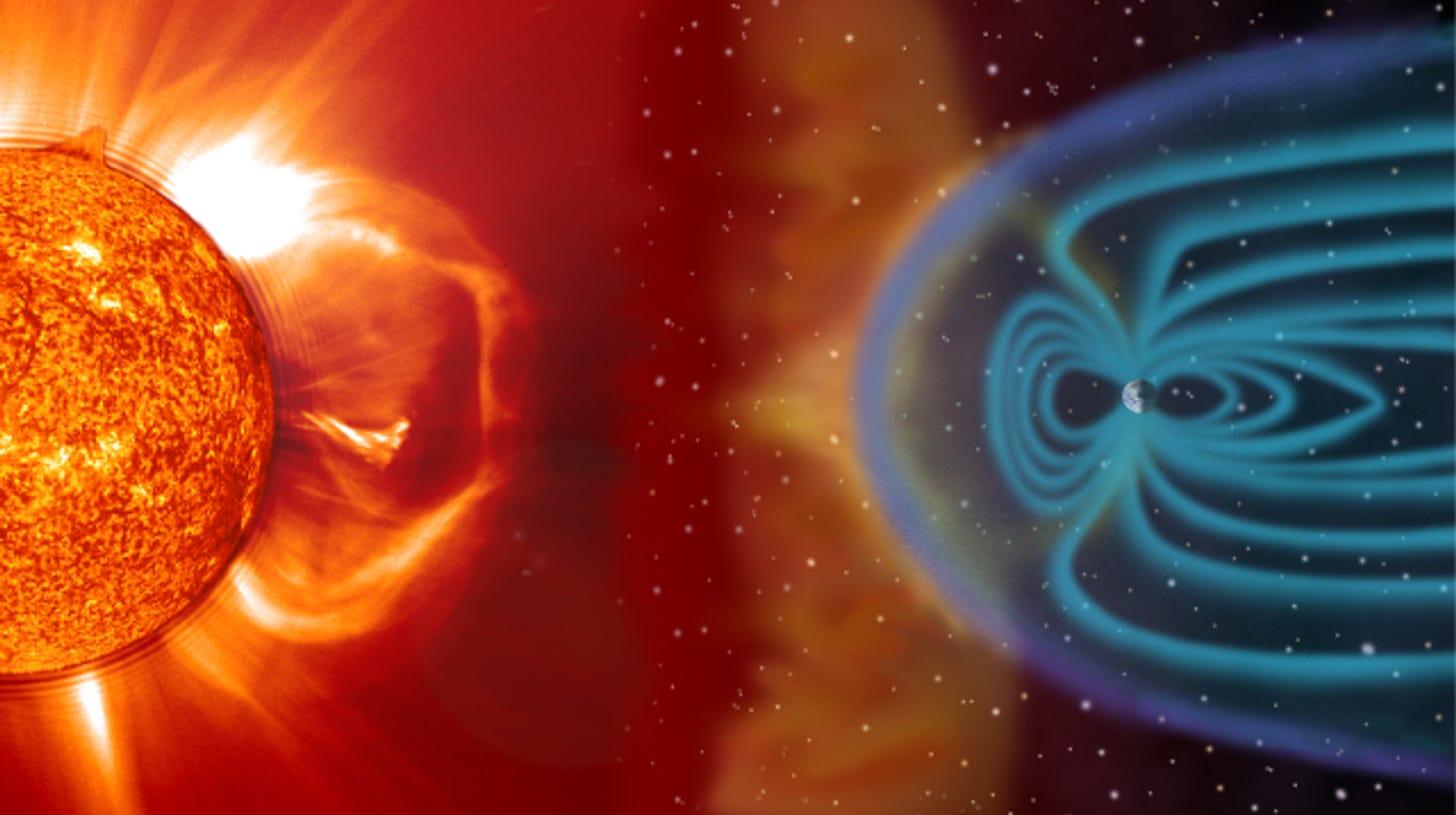

On Earth, we have the luxury of not thinking much about DNA-wrecking radiation. Earth’s glorious magnetic field and atmosphere shield the ground from the bulk of space radiation. However, as soon as a mission ventures into low-Earth orbit (LEO) and beyond, the protection these luxuries provide dwindles within minutes of launch.

In an attempt to make sense of the complicated landscape of radiation sources, types, and effects, let’s begin to group them into subclasses.

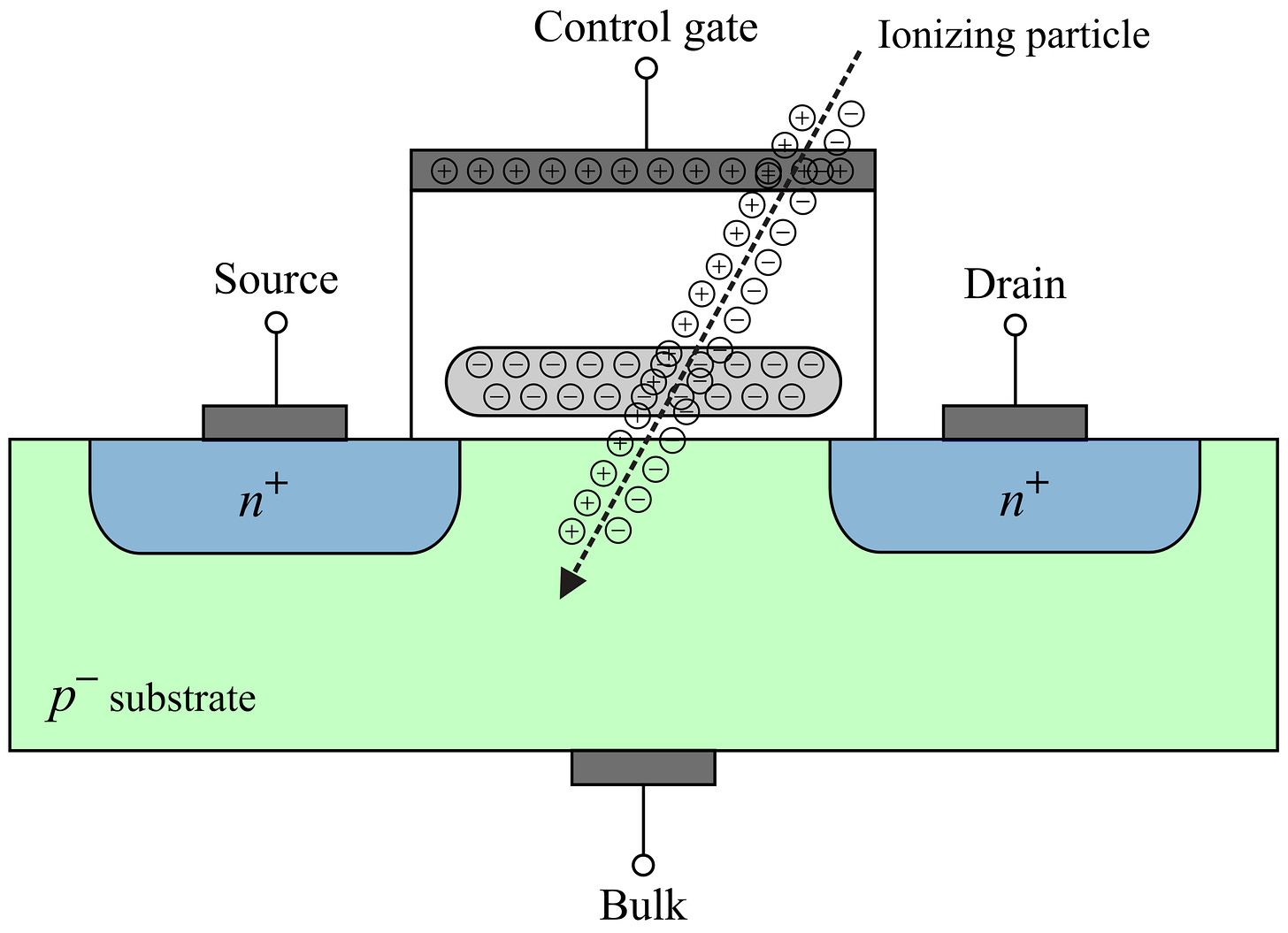

There is ionizing radiation and non-ionizing radiation. Ionizing radiation carries enough energy to knock electrons out of atoms, and in general it is the kind we worry about: it acts like a truck ramming into the side of a building, causing disruptions far beyond the site of the impact. Ionizing radiation can pass through the bulk of a material and alter or damage it along the way. Just as ionizing radiation is bad news for the human body, it is bad news for the electronics onboard a spacecraft too.

There are three main sources of ionizing radiation:

Galactic cosmic rays (GCRs): high-energy atomic nuclei hurtling toward Earth at a significant fraction of the speed of light. They contain:

89% hydrogen nuclei (protons)

10% helium nuclei (alpha particles)

~1% heavier nuclei

Solar energetic particles: particles ejected from the Sun. They contain:

protons

neutrons

electrons

heavier ions (like oxygen)

some gamma rays

Van Allen belts: regions along Earth’s magnetic field lines where the field traps charged particles. They contain:

Mostly protons in the inner belt (around 4,000 km altitude)

Mostly electrons in the outer belt (around 20,000 km altitude)

How radiation affects electronics

For a space mission, the effects of radiation fall into two broad categories: long-term, cumulative degradation of electronics, known as total ionizing dose (TID), and single event effects (SEEs), caused by individual particle strikes. The two main subcategories of SEEs are single event upsets and latch-up. Labeling a processor “radiation-hardened”, “radiation-tolerant”, or “space-grade” describes how robust it is to these radiation effects (with different companies defining these terms slightly differently).

On a typical satellite mission, the Van Allen belts, solar energetic particles, and galactic cosmic rays can all contribute to TID. TID is often associated with “loss of mission”: once your processor has accumulated more dose than it can tolerate, that’s a wrap for your mission. Thankfully, TID is relatively straightforward to protect against. Simple radiation shielding can stop low- and high-energy electrons and low-energy protons, though not high-energy protons. Because a thicker shield lowers the dose accumulated each year, you can tune its thickness to extend the mission lifetime (making the mission more robust to TID), at the cost of extra mass.
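To make the shielding trade-off concrete, here is a minimal sketch of a TID lifetime budget. It assumes a constant dose rate behind the shield and a multiplicative design margin; the function name, the margin of 2, and every number below are illustrative assumptions, not values from any real mission. A thicker shield shows up only as a lower dose rate, which stretches the time before the part’s rated tolerance is used up.

```python
def tid_lifetime_years(part_rating_krad: float,
                       dose_rate_krad_per_year: float,
                       design_margin: float = 2.0) -> float:
    """Years until accumulated dose times a safety margin reaches the part's TID rating.

    Hypothetical model: real dose rates come from an orbit-specific radiation analysis
    for a given shield thickness, and real ratings come from part test data.
    """
    if dose_rate_krad_per_year <= 0:
        raise ValueError("dose rate must be positive")
    return part_rating_krad / (design_margin * dose_rate_krad_per_year)


# Illustrative numbers only: a part rated for 100 krad behind two shield options.
thin_shield = tid_lifetime_years(100.0, dose_rate_krad_per_year=10.0)   # 5.0 years
thick_shield = tid_lifetime_years(100.0, dose_rate_krad_per_year=5.0)   # 10.0 years
print(thin_shield, thick_shield)
```

In this simple model, halving the dose rate doubles the lifetime; how much shield mass it takes to halve the dose depends on the orbit and is not captured here.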

Single event effects, on the other hand, are a little trickier, as they can manifest in mysterious ways. For one, SEEs can cause bit flips, which can corrupt processes anywhere in the processor. The impact of a flipped bit can vary widely, from completely inconsequential to a mission-critical failure. In general, tracking SEEs ranges from difficult to impossible, because at best the computer learns about an SEE only when it surfaces along the execution path.
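To see why the impact of a single flipped bit varies so widely, consider one 64-bit floating-point value held in memory. The sketch below (the helper `flip_bit` is hypothetical, written only for illustration) inverts one bit of the value’s IEEE 754 encoding: flipping the lowest mantissa bit barely changes the number, while flipping the top exponent bit turns 1.0 into infinity.

```python
import struct

def flip_bit(value: float, bit: int) -> float:
    """Return `value` with one bit of its 64-bit IEEE 754 encoding inverted, mimicking an upset."""
    if not 0 <= bit < 64:
        raise ValueError("bit must be in [0, 63]")
    (raw,) = struct.unpack("<Q", struct.pack("<d", value))  # reinterpret the double as a 64-bit integer
    raw ^= 1 << bit                                           # the simulated particle strike
    (flipped,) = struct.unpack("<d", struct.pack("<Q", raw))
    return flipped


print(flip_bit(1.0, 0))   # lowest mantissa bit: 1.0000000000000002 (harmless)
print(flip_bit(1.0, 63))  # sign bit: -1.0 (the sign is reversed)
print(flip_bit(1.0, 62))  # top exponent bit: inf (poisons any downstream arithmetic)
```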

Processors that boast a “radiation-hardened” label include shielding and typically mount components on insulating substrates (as opposed to conventional semiconductor wafers). The two broad classes of hardening are radiation hardening by design and radiation hardening by process:

Radiation hardened by design: leveraging special chip/PCB layouts and even redundancy within the layout. This could include error detection and correction (EDAC) circuits.

Radiation hardened by process: silicon on sapphire (SOS) and silicon on insulator (SOI) come to mind, as they are radiation-hardened at the transistor level (rather than at the layout level, like the above).
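Error-correcting circuits are often based on Hamming-style codes. As an illustration, here is the classic Hamming(7,4) code in software: it stores 4 data bits alongside 3 parity bits so that any single flipped bit can be located and corrected. Real EDAC hardware protects wider memory words and commonly adds double-error detection (SEC-DED); the function names here are hypothetical.

```python
from typing import List, Tuple

def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits [d1, d2, d3, d4] as the 7-bit codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(code: List[int]) -> Tuple[List[int], int]:
    """Correct up to one flipped bit; return (data bits, 1-based error position, or 0 if none)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # the syndrome spells out the position of the bad bit
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], error_pos


word = hamming74_encode([1, 0, 1, 1])
word[4] ^= 1                   # simulate an upset in data bit d2 (codeword position 5)
print(hamming74_decode(word))  # ([1, 0, 1, 1], 5)
```

Two upsets in the same word would be silently miscorrected by this code, which is why hardware designs add an extra parity bit to at least detect that case.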

The point being, cheap commercial off-the-shelf (COTS) processors may have supreme computing power, but they typically aren’t built with the design and process techniques above to hedge against the radiation environment.

However, a go-to method for getting some level of radiation hardening is N-modular redundancy. For example, N=3 COTS processors can be hooked together in what is called a “voting scheme”: they run the same processes in parallel and “vote” on the correct output, so an upset in any one processor is outvoted by the other two. These schemes are robust to single event upsets, but they provide no protection against TID (all of the processors accumulate dose together) and consume more SWaP (size, weight, and power).
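For N=3, the vote reduces to a bitwise majority function. The sketch below is a software illustration under simplifying assumptions: each processor’s output is a single integer word, and the voter itself never fails (in practice the voter may be implemented in hardware and is itself a potential single point of failure). The function names are hypothetical.

```python
from typing import List

def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 vote: each output bit is whatever at least two inputs agree on."""
    return (a & b) | (a & c) | (b & c)

def outvoted_units(a: int, b: int, c: int) -> List[int]:
    """Indices of processors whose output disagrees with the vote (a system might reset these)."""
    voted = majority_vote(a, b, c)
    return [i for i, out in enumerate((a, b, c)) if out != voted]


correct = 0b1011_0010
upset = correct ^ (1 << 3)                          # one processor suffers an upset in bit 3
print(bin(majority_vote(correct, correct, upset)))  # 0b10110010: the upset is masked
print(outvoted_units(correct, correct, upset))      # [2]
```

If two processors are upset in the same bit before they are resynchronized, the vote comes out wrong, so voting schemes typically rely on upsets being rare relative to how often the copies are brought back into agreement.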

If you can’t afford the SWaP or still need extra robustness to TID, a different design philosophy enters the picture, which raises the need to talk about risk tolerance.

Risk tolerance

Broadly speaking, NASA missions have quite a different risk tolerance than most private space companies. Baked into NASA’s design process is the lexicon of mission “class”, with a Class A mission like the James Webb Space Telescope having the lowest tolerance for risk.

On a mission like this, NASA is looking for “guarantees”, so to speak, and a high technology readiness level (TRL) in all things mission-critical. TRL is established largely by precedent, so flying a brand-new Nvidia GPU would likely break the mission’s risk budget for lack of flight heritage.

The commercial space enterprise faces quite different expectations, with risk tolerance almost completely informed by the budget and scale of the project. SpaceX’s Starlink can accept more risk, as there is an extreme amount of redundancy at the mission level: if any one satellite fails, Starlink’s internet service is largely unaffected. In probabilistic terms, SpaceX can accept a higher chance of failure per satellite because it can lose a few satellites while remaining profitable.
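A quick binomial calculation shows why mission-level redundancy loosens per-satellite requirements. Assume failures are independent and each satellite has a hypothetical 95% chance of surviving its design life (these numbers are illustrative, not Starlink data). A single-satellite mission then succeeds 95% of the time, whereas a 100-satellite constellation that only needs 90 working satellites to keep its service running succeeds roughly 99% of the time.

```python
from math import comb

def prob_at_least(k: int, n: int, p_survive: float) -> float:
    """P(at least k of n independent units survive), each surviving with probability p_survive."""
    return sum(comb(n, i) * p_survive**i * (1 - p_survive)**(n - i)
               for i in range(k, n + 1))


p_survive = 0.95                                   # hypothetical per-satellite survival probability
single_mission = prob_at_least(1, 1, p_survive)    # the one satellite must work
constellation = prob_at_least(90, 100, p_survive)  # any 90 of 100 must work
print(f"single satellite: {single_mission:.3f}, constellation: {constellation:.3f}")
```

The independence assumption is the weak point: a shared design flaw or a major solar event can take out many satellites at once, which this model ignores.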

Private and public space also differ drastically in development times. The time from funding to delivering a product to orbit tends to be much shorter in private space. This is not a knock on NASA, but simply a consequence of bureaucratic funding structures and an extremely low tolerance for mission failure.

For this reason, the technology used on a flagship NASA mission might be “frozen” 10 years in advance, meaning the design is anchored to a relatively fixed list of parts long before launch. This is inherent to how NASA “phases” its development cycles, and it can almost single-handedly explain why a processor released in 2001, the BAE RAD750, flew on Mars 2020.

Why desire premier compute?

This raises the question: if NASA has been so successful all these years, why bother bringing cutting-edge computing capabilities to space?

Quite simply, premier compute will enable new possibilities. This next era is defined by a trend toward autonomy, which will undoubtedly demand more compute than ever. A class of algorithms used for entry, descent, and landing will need to leverage high-refresh-rate updates from LIDAR and camera inputs to perform robust real-time control. Essentially, any sort of reasoning about the environment (e.g. if crater == underneath me, guide spacecraft to land away from the crater) will necessitate fast computation in an extremely fast-evolving environment.
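The crater example can be sketched as a toy hazard-avoidance step. Assume a grid in which 1 marks a hazard such as a crater (how that map is built from LIDAR or camera data is out of scope here), and pick the safe cell farthest from any hazard. The function `safest_site` is hypothetical and deliberately brute force; a real lander would likely need something far more efficient (such as a distance transform) over much larger maps, recomputed many times per second during descent, which is exactly where compute becomes the bottleneck.

```python
import math
from typing import List, Optional, Tuple

Cell = Tuple[int, int]

def safest_site(hazard_map: List[List[int]]) -> Optional[Tuple[Cell, float]]:
    """Return the hazard-free cell farthest from any hazard, plus that clearance in grid cells.

    hazard_map[r][c] == 1 marks a hazard (e.g. a crater); 0 marks flat ground.
    Brute force, O(cells x hazards): fine for a toy grid, far too slow for real sensor maps.
    Returns None if every cell is hazardous.
    """
    hazards = [(r, c) for r, row in enumerate(hazard_map)
               for c, v in enumerate(row) if v]
    best: Optional[Cell] = None
    best_clearance = -1.0
    for r, row in enumerate(hazard_map):
        for c, v in enumerate(row):
            if v:
                continue  # never land on a hazard
            # Distance to the nearest hazard; infinite if the map has no hazards at all.
            clearance = min((math.dist((r, c), h) for h in hazards), default=math.inf)
            if clearance > best_clearance:
                best, best_clearance = (r, c), clearance
    return None if best is None else (best, best_clearance)


# A crater in the top-left corner of a 3x3 map pushes the choice to the opposite corner.
site, clearance = safest_site([[1, 0, 0],
                               [0, 0, 0],
                               [0, 0, 0]])
print(site, round(clearance, 2))  # (2, 2) 2.83
```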

On this point, increased “autonomy” expresses itself as AI and machine learning (ML) in space, which also opens an array of use cases for robotics: self-servicing, vehicle health management, and automated guidance, navigation, and control (GNC). For more on autonomy in space, read Maggie’s article on the topic.

With most current satellites focused on remote sensing (essentially, imaging the Earth), increased computing capabilities could have sweeping consequences for onboard decision-making. A host of companies collect a constant stream of Earth data every moment, much of which is redundant or non-essential. Onboard ML algorithms could sift out the non-essential data and downlink only the best images, saving communication bandwidth.
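Here is a minimal sketch of that kind of onboard triage, assuming an upstream model has already assigned each image a cloud-cover fraction. The `Image` fields, the 50% cloud cutoff, and the megabyte budget are all hypothetical. The sketch discards heavily clouded frames, then greedily fills the downlink budget with the clearest remaining images.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Image:
    name: str
    size_mb: float
    cloud_fraction: float  # 0.0 = clear, 1.0 = fully clouded; assumed to come from an onboard model

def select_for_downlink(images: List[Image], budget_mb: float,
                        max_cloud: float = 0.5) -> List[Image]:
    """Greedy triage: drop cloudy frames, then send the clearest images that fit the budget."""
    usable = sorted((im for im in images if im.cloud_fraction <= max_cloud),
                    key=lambda im: im.cloud_fraction)
    chosen: List[Image] = []
    used_mb = 0.0
    for im in usable:
        if used_mb + im.size_mb <= budget_mb:
            chosen.append(im)
            used_mb += im.size_mb
    return chosen


captured = [Image("A", 100, 0.1), Image("B", 100, 0.9),
            Image("C", 100, 0.3), Image("D", 100, 0.2)]
print([im.name for im in select_for_downlink(captured, budget_mb=250)])  # ['A', 'D']
```

Filling the budget greedily by score is simple but not optimal when image sizes differ (that is a knapsack problem); it only shows the shape of the decision.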

So yes, premier compute would be… quite nice for the future of space, and in some cases, the trends toward big data, increased autonomy, and robotics will be bottlenecked without it.

The nuances to bend the rules

As we enter this next chapter, in which the private sector dominates space, quick mission phasing is positioned to put pressure on conservative space ideals. With private companies becoming more involved in many aspects of public space missions (launch vehicle production, spacecraft creation, and payload creation), it will be interesting to see whether this subtly alters the TRL-based engineering design approach.

What I’m not saying is that private companies are risky and NASA is not. Rather, different funding structures and institutional dynamics produce this broader trend. It is also important to point out that the broader profile of “what kinds of space missions are happening?” is changing these trends. Private space is heavily involved in LEO, where you can afford to take slightly more risk because the radiation environment is inherently less harsh than in deep space. While private space companies have grand plans for asteroid mining and activating the cislunar economy, we have seldom seen them venture into interplanetary environments.

The tendency to use radiation-hardened processors might be changing in LEO, but the greater radiation threats of deep space may push even the most risk-tolerant companies toward slower but robust rad-hardened processors. A processor like the RAD750 delivers guarantees on mission lifetime that a shiny COTS processor simply cannot offer without some combination of rad-hardening by design, rad-hardening by process, redundancy, or extra shielding for TID.

However, if your mission is meant for LEO, N-modular redundancy with a voting scheme, silicon-on-insulator technology, or both may deliver the level of robustness desired for a 5-year satellite lifetime. Especially if you’re a space startup trying to fly the most advanced payload in space, using COTS components will almost certainly be the path forward.

Where do we go now?

Risk tolerance deeply shapes how we build and what we are capable of. NASA and private space generally have different build philosophies, but I think we can learn from both. NASA didn’t need advanced AI/ML algorithms to land on Mars, and a few generations ago we landed on the Moon with orders of magnitude less computing power. There is no doubt we can already accomplish amazing things without the most spec’d-out Nvidia GPU, but it’s an exciting time to think about what could be accomplished without a compute bottleneck in space.

By porting the most advanced terrestrial AI and robotics to space, we could open up an entirely new realm of possibilities. A realm where we can extend mission lifetimes with autonomous spacecraft-servicing robots. A realm where we can parse through massive amounts of Earth imagery to learn about carbon emissions. A realm where we can have completely autonomous science missions to look for signs of life elsewhere in our solar system. Addressing this bottleneck will be fundamental to expanding humanity’s involvement in space. A combination of system-level redundancy, evolving risk tolerance, and new radiation-hardening-by-process techniques will be instrumental in achieving this goal.

Caveats

There are other ways of radiation-hardening that I’ve left out here for the sake of simplicity. If you think there is a crucial technology or physics explanation I’ve left out, please reach out to me at eick [at] mit [dot] edu — I would be happy to chat.